Chapter 8 Probability & Simulation

8.1 Learning Objectives

8.1.1 Basic

- Generate and plot data randomly sampled from common distributions (video)

- Generate related variables from a multivariate distribution (video)

- Define the following statistical terms:

- p-value

- alpha

- power

- smallest effect size of interest (SESOI)

- false positive (type I error)

- false negative (type II error)

- confidence interval (CI)

- Test sampled distributions against a null hypothesis (video)

- exact binomial test

- t-test (1-sample, independent samples, paired samples)

- correlation (pearson, kendall and spearman)

- Calculate power using iteration and a sampling function

8.1.2 Intermediate

- Calculate the minimum sample size for a specific power level and design

8.2 Resources

- Stub for this lesson

- Distribution Shiny App (or run

dataskills::app("simulate") - Simulation tutorials

- Chapter 21: Iteration of R for Data Science

- Improving your statistical inferences on Coursera (week 1)

- Faux package for data simulation

- Simulation-Based Power-Analysis for Factorial ANOVA Designs (Lakens and Caldwell 2019)

- Understanding mixed effects models through data simulation (DeBruine and Barr 2019)

8.3 Setup

# libraries needed for these examples

library(tidyverse)

library(plotly)

library(faux)

set.seed(8675309) # makes sure random numbers are reproducibleSimulating data is a very powerful way to test your understanding of statistical concepts. We are going to use simulations to learn the basics of probability.

8.4 Univariate Distributions

First, we need to understand some different ways data might be distributed and how to simulate data from these distributions. A univariate distribution is the distribution of a single variable.

8.4.1 Uniform Distribution

The uniform distribution is the simplest distribution. All numbers in the range have an equal probability of being sampled.

Take a minute to think of things in your own research that are uniformly distributed.

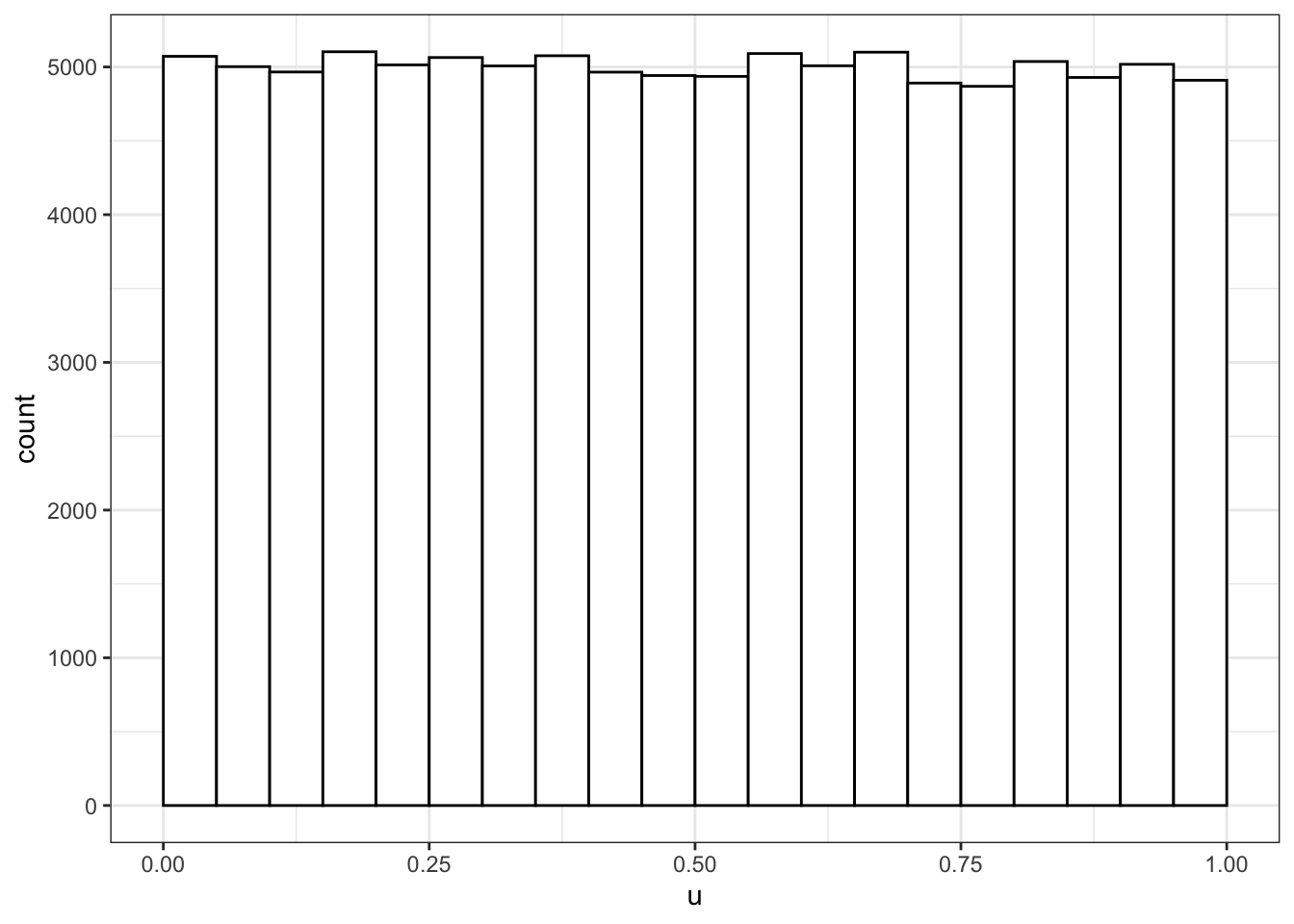

8.4.1.1 Continuous distribution

runif(n, min=0, max=1)

Use runif() to sample from a continuous uniform distribution.

u <- runif(100000, min = 0, max = 1)

# plot to visualise

ggplot() +

geom_histogram(aes(u), binwidth = 0.05, boundary = 0,

fill = "white", colour = "black")

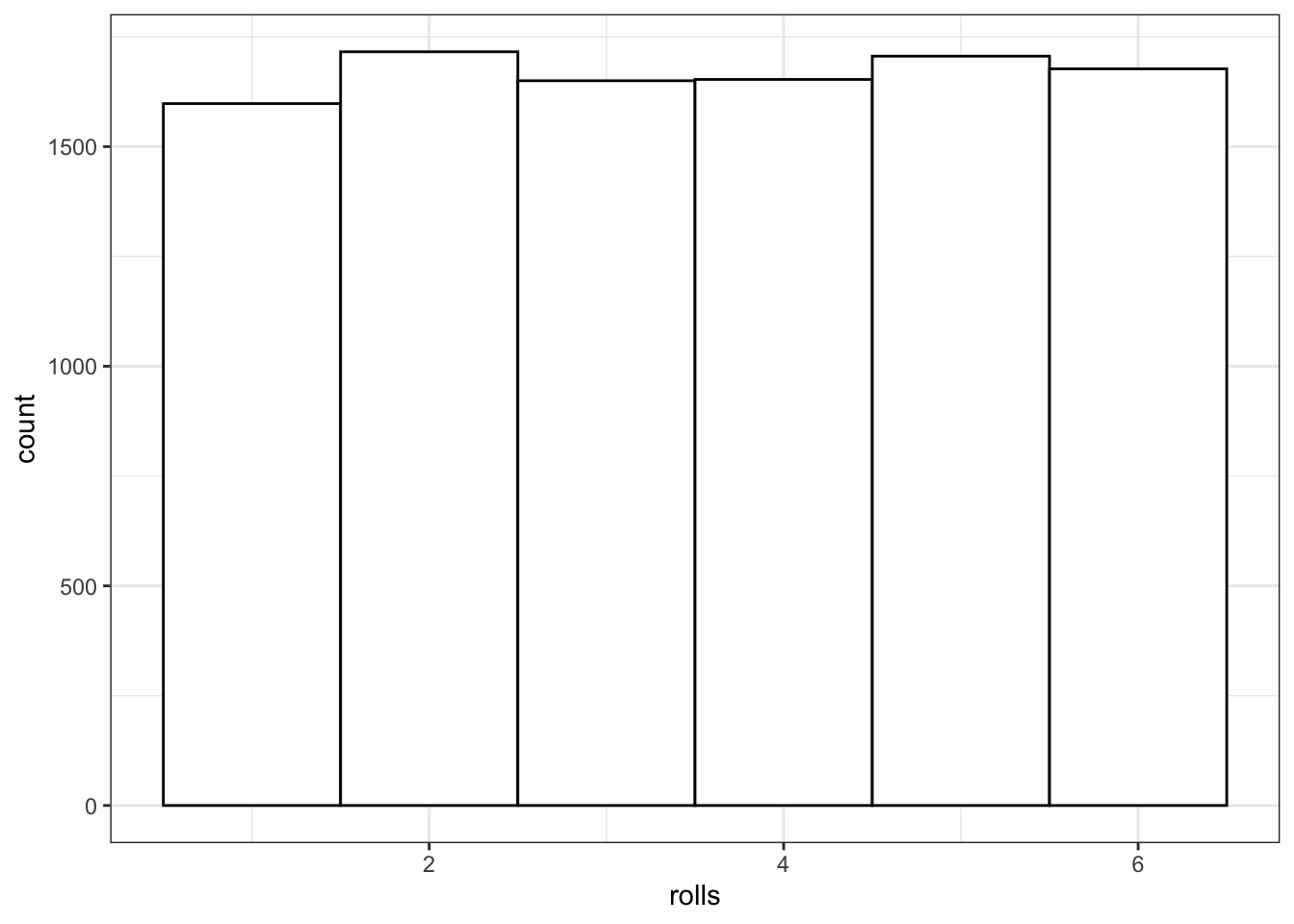

8.4.1.2 Discrete

sample(x, size, replace = FALSE, prob = NULL)

Use sample() to sample from a discrete distribution.

You can use sample() to simulate events like rolling dice or choosing from a deck of cards. The code below simulates rolling a 6-sided die 10000 times. We set replace to TRUE so that each event is independent. See what happens if you set replace to FALSE.

rolls <- sample(1:6, 10000, replace = TRUE)

# plot the results

ggplot() +

geom_histogram(aes(rolls), binwidth = 1,

fill = "white", color = "black")

Figure 8.1: Distribution of dice rolls.

You can also use sample to sample from a list of named outcomes.

## [1] "cat" "cat" "cat" "cat" "ferret" "dog" "bird" "cat"

## [9] "dog" "fish"Ferrets are a much less common pet than cats and dogs, so our sample isn’t very realistic. You can set the probabilities of each item in the list with the prob argument.

pet_types <- c("cat", "dog", "ferret", "bird", "fish")

pet_prob <- c(0.3, 0.4, 0.1, 0.1, 0.1)

sample(pet_types, 10, replace = TRUE, prob = pet_prob)## [1] "fish" "dog" "cat" "dog" "cat" "dog" "fish" "dog" "cat" "fish"8.4.2 Binomial Distribution

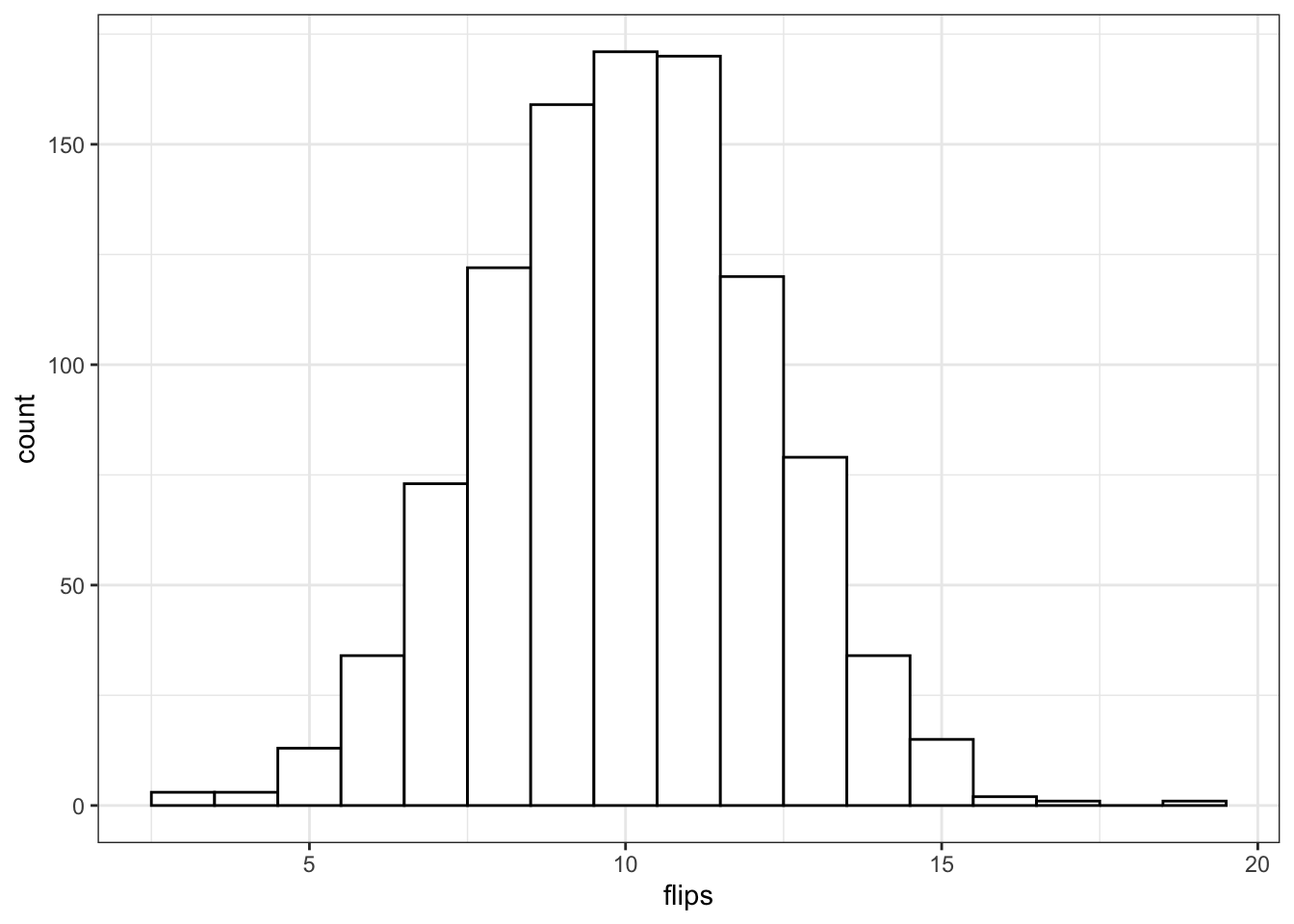

The binomial distribution is useful for modelling binary data, where each observation can have one of two outcomes, like success/failure, yes/no or head/tails.

rbinom(n, size, prob)

The rbinom function will generate a random binomial distribution.

n= number of observationssize= number of trialsprob= probability of success on each trial

Coin flips are a typical example of a binomial distribution, where we can assign heads to 1 and tails to 0.

## [1] 1 1 1 0 1 1 0 1 0 0 1 1 1 0 0 0 1 0 0 0## [1] 1 1 1 0 1 0 1 1 1 0 1 1 1 0 0 1 1 1 1 1You can generate the total number of heads in 1 set of 20 coin flips by setting size to 20 and n to 1.

## [1] 13You can generate more sets of 20 coin flips by increasing the n.

## [1] 10 14 11 7 11 13 6 10 9 9You should always check your randomly generated data to check that it makes sense. For large samples, it’s easiest to do that graphically. A histogram is usually the best choice for plotting binomial data.

flips <- rbinom(1000, 20, 0.5)

ggplot() +

geom_histogram(

aes(flips),

binwidth = 1,

fill = "white",

color = "black"

)

Run the simulation above several times, noting how the histogram changes. Try changing the values of n, size, and prob.

8.4.3 Normal Distribution

rnorm(n, mean, sd)

We can simulate a normal distribution of size n if we know the mean and standard deviation (sd). A density plot is usually the best way to visualise this type of data if your n is large.

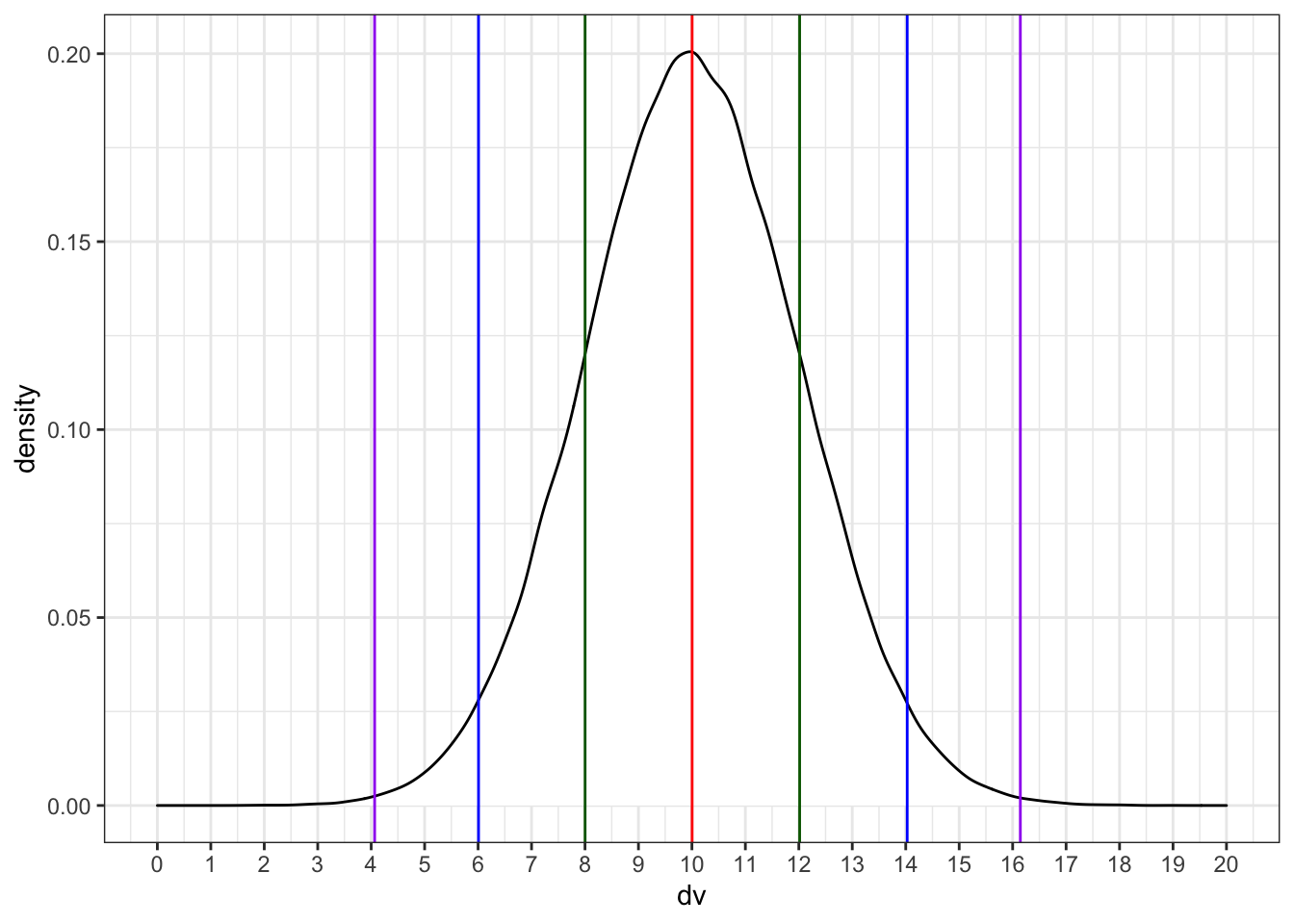

dv <- rnorm(1e5, 10, 2)

# proportions of normally-distributed data

# within 1, 2, or 3 SD of the mean

sd1 <- .6827

sd2 <- .9545

sd3 <- .9973

ggplot() +

geom_density(aes(dv), fill = "white") +

geom_vline(xintercept = mean(dv), color = "red") +

geom_vline(xintercept = quantile(dv, .5 - sd1/2), color = "darkgreen") +

geom_vline(xintercept = quantile(dv, .5 + sd1/2), color = "darkgreen") +

geom_vline(xintercept = quantile(dv, .5 - sd2/2), color = "blue") +

geom_vline(xintercept = quantile(dv, .5 + sd2/2), color = "blue") +

geom_vline(xintercept = quantile(dv, .5 - sd3/2), color = "purple") +

geom_vline(xintercept = quantile(dv, .5 + sd3/2), color = "purple") +

scale_x_continuous(

limits = c(0,20),

breaks = seq(0,20)

)

Run the simulation above several times, noting how the density plot changes. What do the vertical lines represent? Try changing the values of n, mean, and sd.

8.4.4 Poisson Distribution

The Poisson distribution is useful for modelling events, like how many times something happens over a unit of time, as long as the events are independent (e.g., an event having happened in one time period doesn’t make it more or less likely to happen in the next).

rpois(n, lambda)

The rpois function will generate a random Poisson distribution.

n= number of observationslambda= the mean number of events per observation

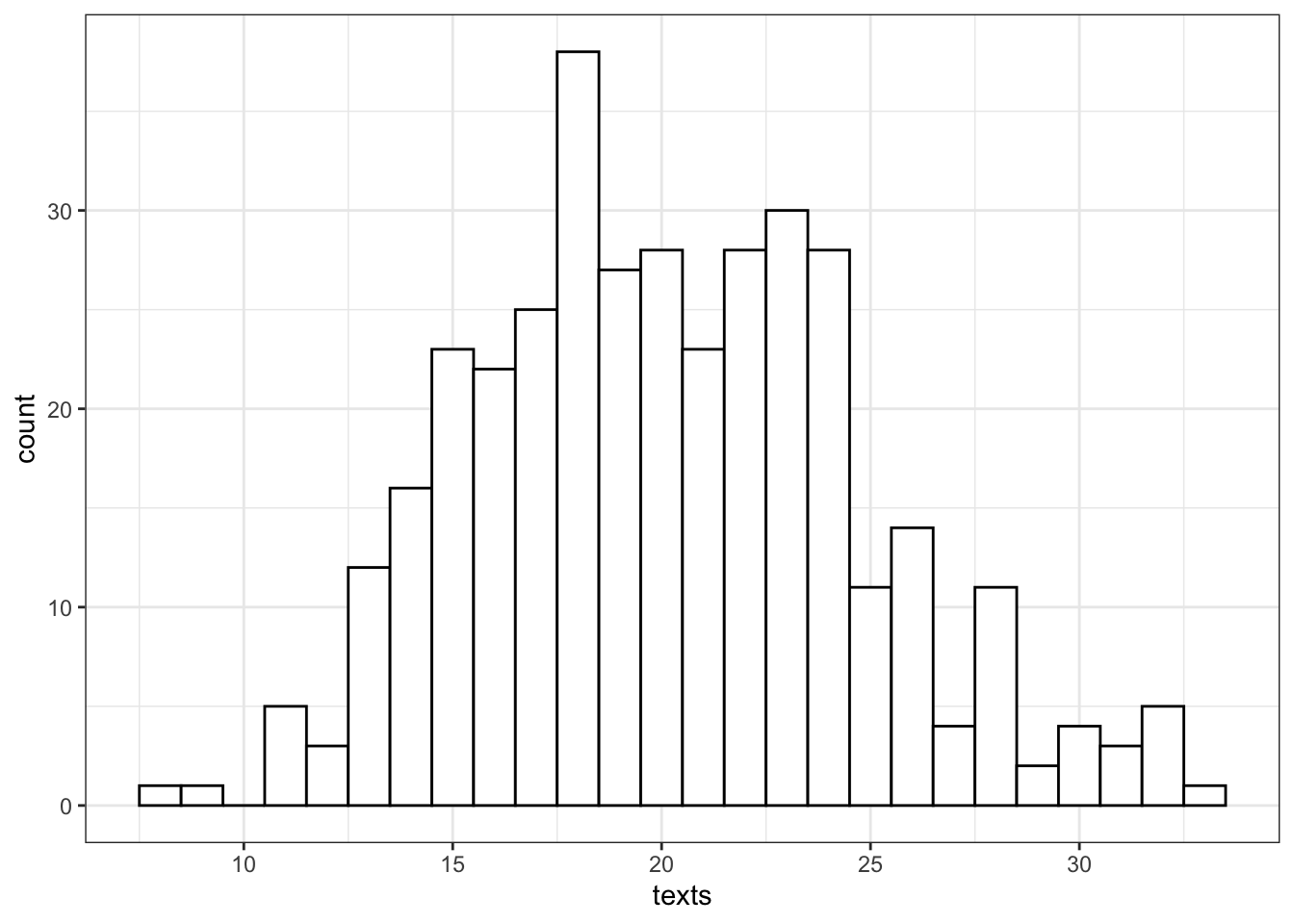

Let’s say we want to model how many texts you get each day for a whole. You know that you get an average of 20 texts per day. So we set n = 365 and lambda = 20. Lambda is a parameter that describes the Poisson distribution, just like mean and standard deviation are parameters that describe the normal distribution.

texts <- rpois(n = 365, lambda = 20)

ggplot() +

geom_histogram(

aes(texts),

binwidth = 1,

fill = "white",

color = "black"

)

So we can see that over a year, you’re unlikely to get fewer than 5 texts in a day, or more than 35 (although it’s not impossible).

8.5 Multivariate Distributions

8.5.1 Bivariate Normal

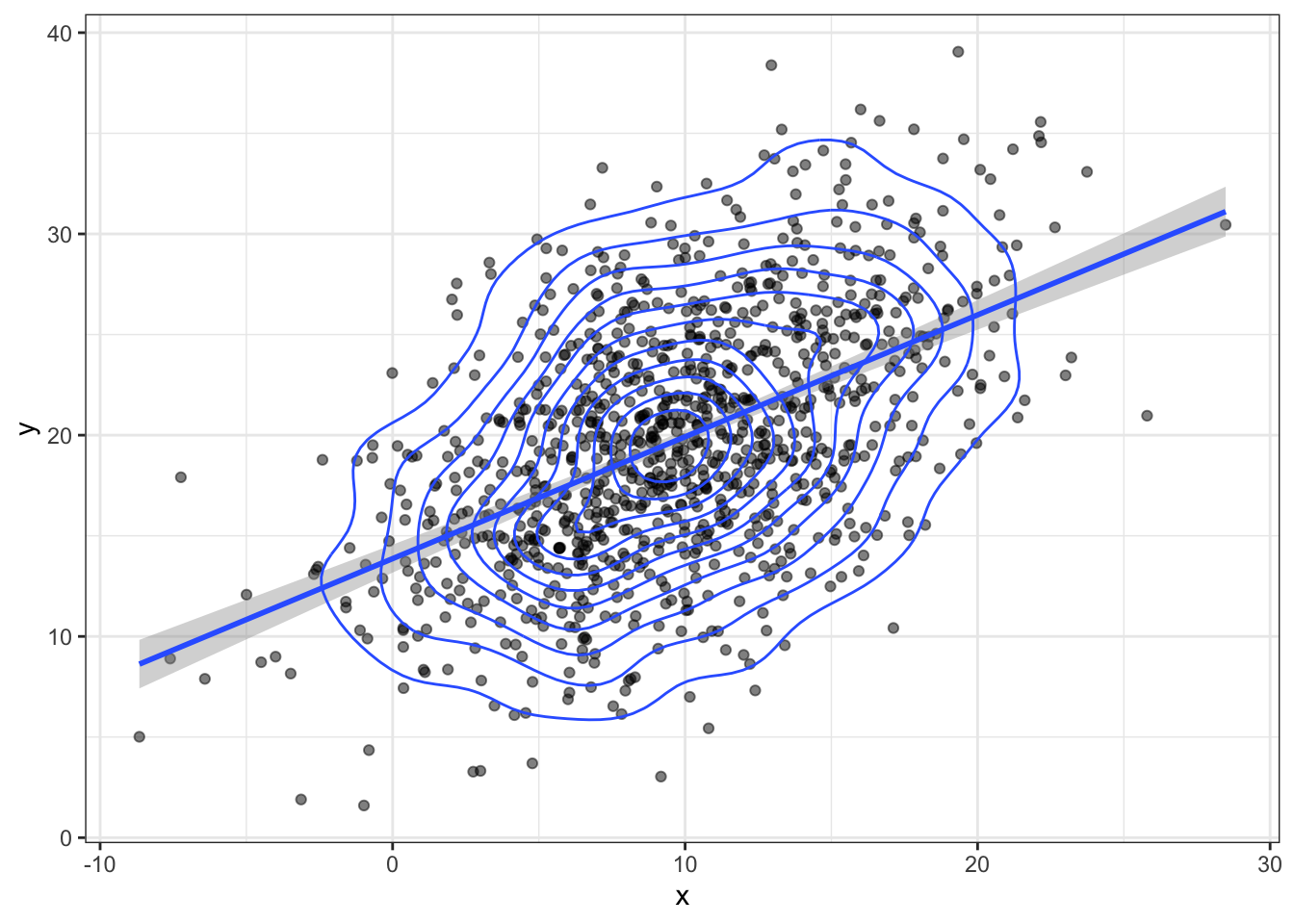

A bivariate normal distribution is two normally distributed vectors that have a specified relationship, or correlation to each other.

What if we want to sample from a population with specific relationships between variables? We can sample from a bivariate normal distribution using mvrnorm() from the MASS package.

Don’t load MASS with the library() function because it will create a conflict with the select() function from dplyr and you will always need to preface it with dplyr::. Just use MASS::mvrnorm().

You need to know how many observations you want to simulate (n) the means of the two variables (mu) and you need to calculate a covariance matrix (sigma) from the correlation between the variables (rho) and their standard deviations (sd).

n <- 1000 # number of random samples

# name the mu values to give the resulting columns names

mu <- c(x = 10, y = 20) # the means of the samples

sd <- c(5, 6) # the SDs of the samples

rho <- 0.5 # population correlation between the two variables

# correlation matrix

cor_mat <- matrix(c( 1, rho,

rho, 1), 2)

# create the covariance matrix

sigma <- (sd %*% t(sd)) * cor_mat

# sample from bivariate normal distribution

bvn <- MASS::mvrnorm(n, mu, sigma) Plot your sampled variables to check everything worked like you expect. It’s easiest to convert the output of mvnorm into a tibble in order to use it in ggplot.

bvn %>%

as_tibble() %>%

ggplot(aes(x, y)) +

geom_point(alpha = 0.5) +

geom_smooth(method = "lm") +

geom_density2d()## `geom_smooth()` using formula 'y ~ x'

8.5.2 Multivariate Normal

You can generate more than 2 correlated variables, but it gets a little trickier to create the correlation matrix.

n <- 200 # number of random samples

mu <- c(x = 10, y = 20, z = 30) # the means of the samples

sd <- c(8, 9, 10) # the SDs of the samples

rho1_2 <- 0.5 # correlation between x and y

rho1_3 <- 0 # correlation between x and z

rho2_3 <- 0.7 # correlation between y and z

# correlation matrix

cor_mat <- matrix(c( 1, rho1_2, rho1_3,

rho1_2, 1, rho2_3,

rho1_3, rho2_3, 1), 3)

sigma <- (sd %*% t(sd)) * cor_mat

bvn3 <- MASS::mvrnorm(n, mu, sigma)

cor(bvn3) # check correlation matrix## x y z

## x 1.0000000 0.5896674 0.1513108

## y 0.5896674 1.0000000 0.7468737

## z 0.1513108 0.7468737 1.0000000You can use the plotly library to make a 3D graph.

#set up the marker style

marker_style = list(

color = "#ff0000",

line = list(

color = "#444",

width = 1

),

opacity = 0.5,

size = 5

)

# convert bvn3 to a tibble, plot and add markers

bvn3 %>%

as_tibble() %>%

plot_ly(x = ~x, y = ~y, z = ~z, marker = marker_style) %>%

add_markers()## Warning: `arrange_()` is deprecated as of dplyr 0.7.0.

## Please use `arrange()` instead.

## See vignette('programming') for more help

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_warnings()` to see where this warning was generated.8.5.3 Faux

Alternatively, you can use the package faux to generate any number of correlated variables. It also has a function for checking the parameters of your new simulated data (check_sim_stats()).

bvn3 <- rnorm_multi(

n = n,

vars = 3,

mu = mu,

sd = sd,

r = c(rho1_2, rho1_3, rho2_3),

varnames = c("x", "y", "z")

)

check_sim_stats(bvn3)## n var x y z mean sd

## 1 200 x 1.00 0.54 0.10 10.35 7.66

## 2 200 y 0.54 1.00 0.67 20.01 8.77

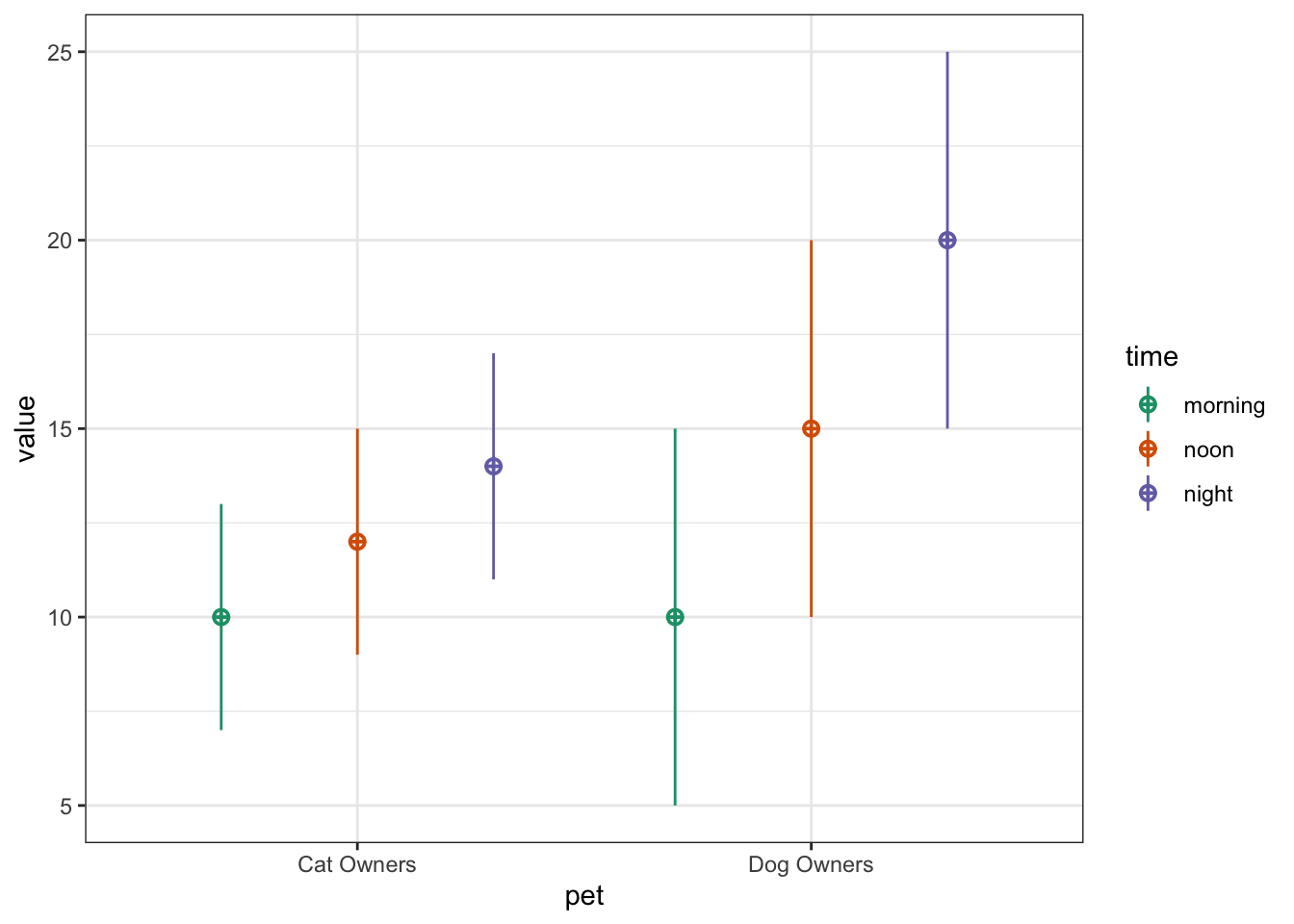

## 3 200 z 0.10 0.67 1.00 30.37 9.59You can also use faux to simulate data for factorial designs. Set up the between-subject and within-subject factors as lists with the levels as (named) vectors. Means and standard deviations can be included as vectors or data frames. The function calculates sigma for you, structures your dataset, and outputs a plot of the design.

b <- list(pet = c(cat = "Cat Owners",

dog = "Dog Owners"))

w <- list(time = c("morning",

"noon",

"night"))

mu <- data.frame(

cat = c(10, 12, 14),

dog = c(10, 15, 20),

row.names = w$time

)

sd <- c(3, 3, 3, 5, 5, 5)

pet_data <- sim_design(

within = w,

between = b,

n = 100,

mu = mu,

sd = sd,

r = .5)

You can use the check_sim_stats() function, but you need to set the argument between to a vector of all the between-subject factor columns.

## pet n var morning noon night mean sd

## 1 cat 100 morning 1.00 0.57 0.51 10.62 3.48

## 2 cat 100 night 0.51 0.59 1.00 14.61 3.14

## 3 cat 100 noon 0.57 1.00 0.59 12.44 3.01

## 4 dog 100 morning 1.00 0.55 0.50 9.44 4.92

## 5 dog 100 night 0.50 0.48 1.00 19.42 5.36

## 6 dog 100 noon 0.55 1.00 0.48 14.18 5.90See the faux website for more detailed tutorials.

8.6 Statistical terms

Let’s review some important statistical terms before we review tests of distributions.

8.6.1 Effect

The effect is some measure of your data. This will depend on the type of data you have and the type of statistical test you are using. For example, if you flipped a coin 100 times and it landed heads 66 times, the effect would be 66/100. You can then use the exact binomial test to compare this effect to the null effect you would expect from a fair coin (50/100) or to any other effect you choose. The effect size refers to the difference between the effect in your data and the null effect (usually a chance value).

8.6.2 P-value

The p-value of a test is the probability of seeing an effect at least as extreme as what you have, if the real effect was the value you are testing against (e.g., a null effect). So if you used a binomial test to test against a chance probability of 1/6 (e.g., the probability of rolling 1 with a 6-sided die), then a p-value of 0.17 means that you could expect to see effects at least as extreme as your data 17% of the time just by chance alone.

8.6.3 Alpha

If you are using null hypothesis significance testing (NHST), then you need to decide on a cutoff value (alpha) for making a decision to reject the null hypothesis. We call p-values below the alpha cutoff significant. In psychology, alpha is traditionally set at 0.05, but there are good arguments for setting a different criterion in some circumstances.

8.6.4 False Positive/Negative

The probability that a test concludes there is an effect when there is really no effect (e.g., concludes a fair coin is biased) is called the false positive rate (or Type I Error Rate). The alpha is the false positive rate we accept for a test. The probability that a test concludes there is no effect when there really is one (e.g., concludes a biased coin is fair) is called the false negative rate (or Type II Error Rate). The beta is the false negative rate we accept for a test.

The false positive rate is not the overall probability of getting a false positive, but the probability of a false positive under the null hypothesis. Similarly, the false negative rate is the probability of a false negative under the alternative hypothesis. Unless we know the probability that we are testing a null effect, we can’t say anything about the overall probability of false positives or negatives. If 100% of the hypotheses we test are false, then all significant effects are false positives, but if all of the hypotheses we test are true, then all of the positives are true positives and the overall false positive rate is 0.

8.6.5 Power and SESOI

Power is equal to 1 minus beta (i.e., the true positive rate), and depends on the effect size, how many samples we take (n), and what we set alpha to. For any test, if you specify all but one of these values, you can calculate the last. The effect size you use in power calculations should be the smallest effect size of interest (SESOI). See (Lakens, Scheel, and Isager 2018)(https://doi.org/10.1177/2515245918770963) for a tutorial on methods for choosing an SESOI.

Let’s say you want to be able to detect at least a 15% difference from chance (50%) in a coin’s fairness, and you want your test to have a 5% chance of false positives and a 10% chance of false negatives. What are the following values?

- alpha =

- beta =

- false positive rate =

- false negative rate =

- power =

- SESOI =

8.6.6 Confidence Intervals

The confidence interval is a range around some value (such as a mean) that has some probability of containing the parameter, if you repeated the process many times. Traditionally in psychology, we use 95% confidence intervals, but you can calculate CIs for any percentage.

A 95% CI does not mean that there is a 95% probability that the true mean lies within this range, but that, if you repeated the study many times and calculated the CI this same way every time, you’d expect the true mean to be inside the CI in 95% of the studies. This seems like a subtle distinction, but can lead to some misunderstandings. See (Morey et al. 2016)(https://link.springer.com/article/10.3758/s13423-015-0947-8) for more detailed discussion.

8.7 Tests

8.7.1 Exact binomial test

binom.test(x, n, p)

You can test a binomial distribution against a specific probability using the exact binomial test.

x= the number of successesn= the number of trialsp= hypothesised probability of success

Here we can test a series of 10 coin flips from a fair coin and a biased coin against the hypothesised probability of 0.5 (even odds).

n <- 10

fair_coin <- rbinom(1, n, 0.5)

biased_coin <- rbinom(1, n, 0.6)

binom.test(fair_coin, n, p = 0.5)

binom.test(biased_coin, n, p = 0.5)##

## Exact binomial test

##

## data: fair_coin and n

## number of successes = 6, number of trials = 10, p-value = 0.7539

## alternative hypothesis: true probability of success is not equal to 0.5

## 95 percent confidence interval:

## 0.2623781 0.8784477

## sample estimates:

## probability of success

## 0.6

##

##

## Exact binomial test

##

## data: biased_coin and n

## number of successes = 8, number of trials = 10, p-value = 0.1094

## alternative hypothesis: true probability of success is not equal to 0.5

## 95 percent confidence interval:

## 0.4439045 0.9747893

## sample estimates:

## probability of success

## 0.8Run the code above several times, noting the p-values for the fair and biased coins. Alternatively, you can simulate coin flips online and build up a graph of results and p-values.

- How does the p-value vary for the fair and biased coins?

- What happens to the confidence intervals if you increase n from 10 to 100?

- What criterion would you use to tell if the observed data indicate the coin is fair or biased?

- How often do you conclude the fair coin is biased (false positives)?

- How often do you conclude the biased coin is fair (false negatives)?

8.7.1.1 Sampling function

To estimate these rates, we need to repeat the sampling above many times. A function is ideal for repeating the exact same procedure over and over. Set the arguments of the function to variables that you might want to change. Here, we will want to estimate power for:

- different sample sizes (

n) - different effects (

bias) - different hypothesised probabilities (

p, defaults to 0.5)

sim_binom_test <- function(n, bias, p = 0.5) {

# simulate 1 coin flip n times with the specified bias

coin <- rbinom(1, n, bias)

# run a binomial test on the simulated data for the specified p

btest <- binom.test(coin, n, p)

# return the p-value of this test

btest$p.value

}Once you’ve created your function, test it a few times, changing the values.

## [1] 0.13321068.7.1.2 Calculate power

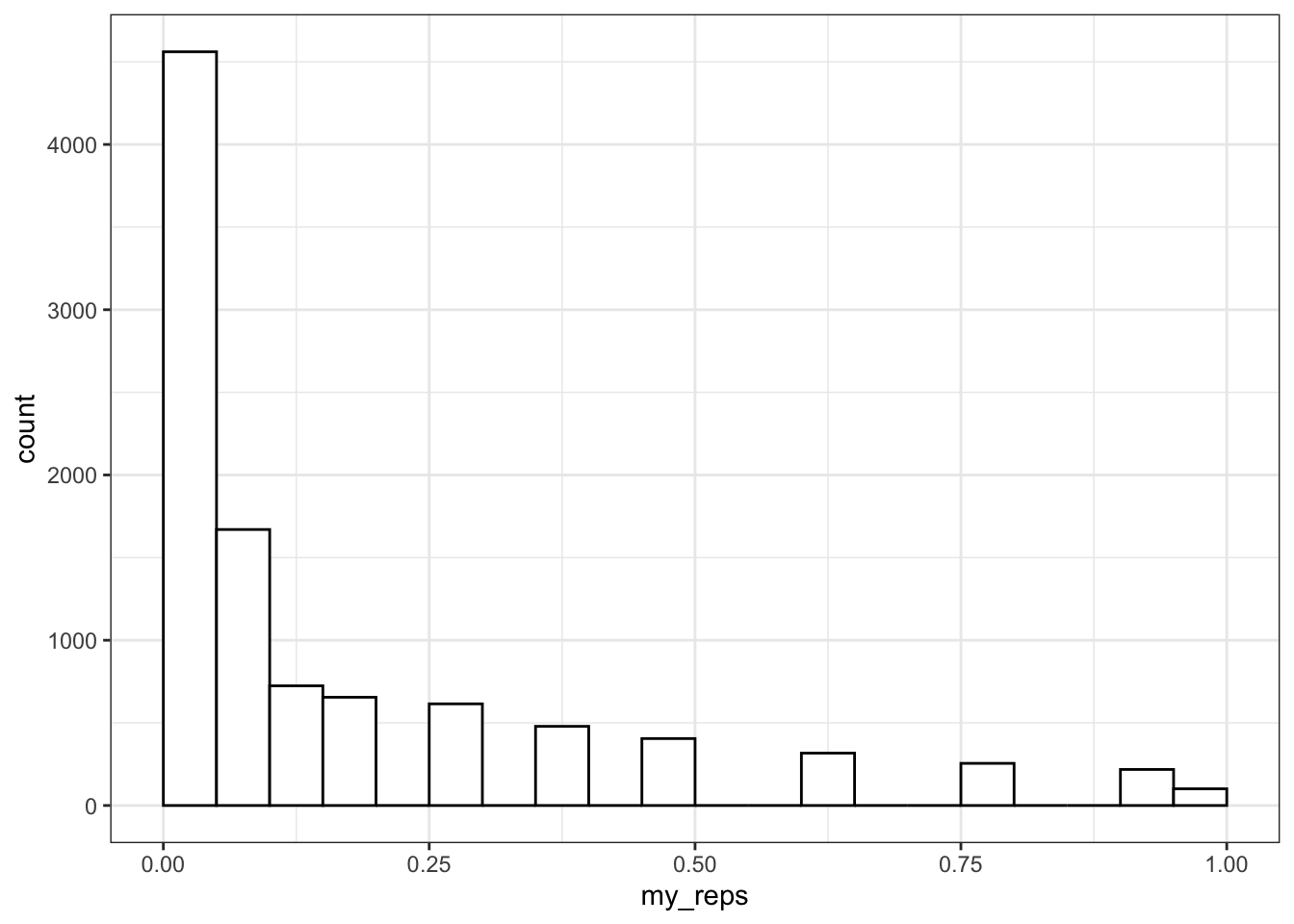

Then you can use the replicate() function to run it many times and save all the output values. You can calculate the power of your analysis by checking the proportion of your simulated analyses that have a p-value less than your alpha (the probability of rejecting the null hypothesis when the null hypothesis is true).

my_reps <- replicate(1e4, sim_binom_test(100, 0.6))

alpha <- 0.05 # this does not always have to be 0.05

mean(my_reps < alpha)## [1] 0.4561

1e4 is just scientific notation for a 1 followed by 4 zeros (10000). When you’re running simulations, you usually want to run a lot of them. It’s a pain to keep track of whether you’ve typed 5 or 6 zeros (100000 vs 1000000) and this will change your running time by an order of magnitude.

You can plot the distribution of p-values.

ggplot() +

geom_histogram(

aes(my_reps),

binwidth = 0.05,

boundary = 0,

fill = "white",

color = "black"

)

8.7.2 T-test

t.test(x, y, alternative, mu, paired)

Use a t-test to compare the mean of one distribution to a null hypothesis (one-sample t-test), compare the means of two samples (independent-samples t-test), or compare pairs of values (paired-samples t-test).

You can run a one-sample t-test comparing the mean of your data to mu. Here is a simulated distribution with a mean of 0.5 and an SD of 1, creating an effect size of 0.5 SD when tested against a mu of 0. Run the simulation a few times to see how often the t-test returns a significant p-value (or run it in the shiny app).

##

## One Sample t-test

##

## data: sim_norm

## t = 6.2874, df = 99, p-value = 8.758e-09

## alternative hypothesis: true mean is not equal to 0

## 95 percent confidence interval:

## 0.4049912 0.7784761

## sample estimates:

## mean of x

## 0.5917337Run an independent-samples t-test by comparing two lists of values.

##

## Welch Two Sample t-test

##

## data: a and b

## t = -1.8061, df = 197.94, p-value = 0.07243

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -0.54825320 0.02408469

## sample estimates:

## mean of x mean of y

## 0.4585985 0.7206828

The paired argument defaults to FALSE, but it’s good practice to always explicitly set it so you are never confused about what type of test you are performing.

8.7.2.1 Sampling function

We can use the names() function to find out the names of all the t.test parameters and use this to just get one type of data, like the test statistic (e.g., t-value).

## [1] "statistic" "parameter" "p.value" "conf.int" "estimate"

## [6] "null.value" "stderr" "alternative" "method" "data.name"

## t

## -1.806051If you want to run the simulation many times and record information each time, first you need to turn your simulation into a function.

sim_t_ind <- function(n, m1, sd1, m2, sd2) {

# simulate v1

v1 <- rnorm(n, m1, sd1)

#simulate v2

v2 <- rnorm(n, m2, sd2)

# compare using an independent samples t-test

t_ind <- t.test(v1, v2, paired = FALSE)

# return the p-value

return(t_ind$p.value)

}Run it a few times to check that it gives you sensible values.

## [1] 0.3625218.7.2.2 Calculate power

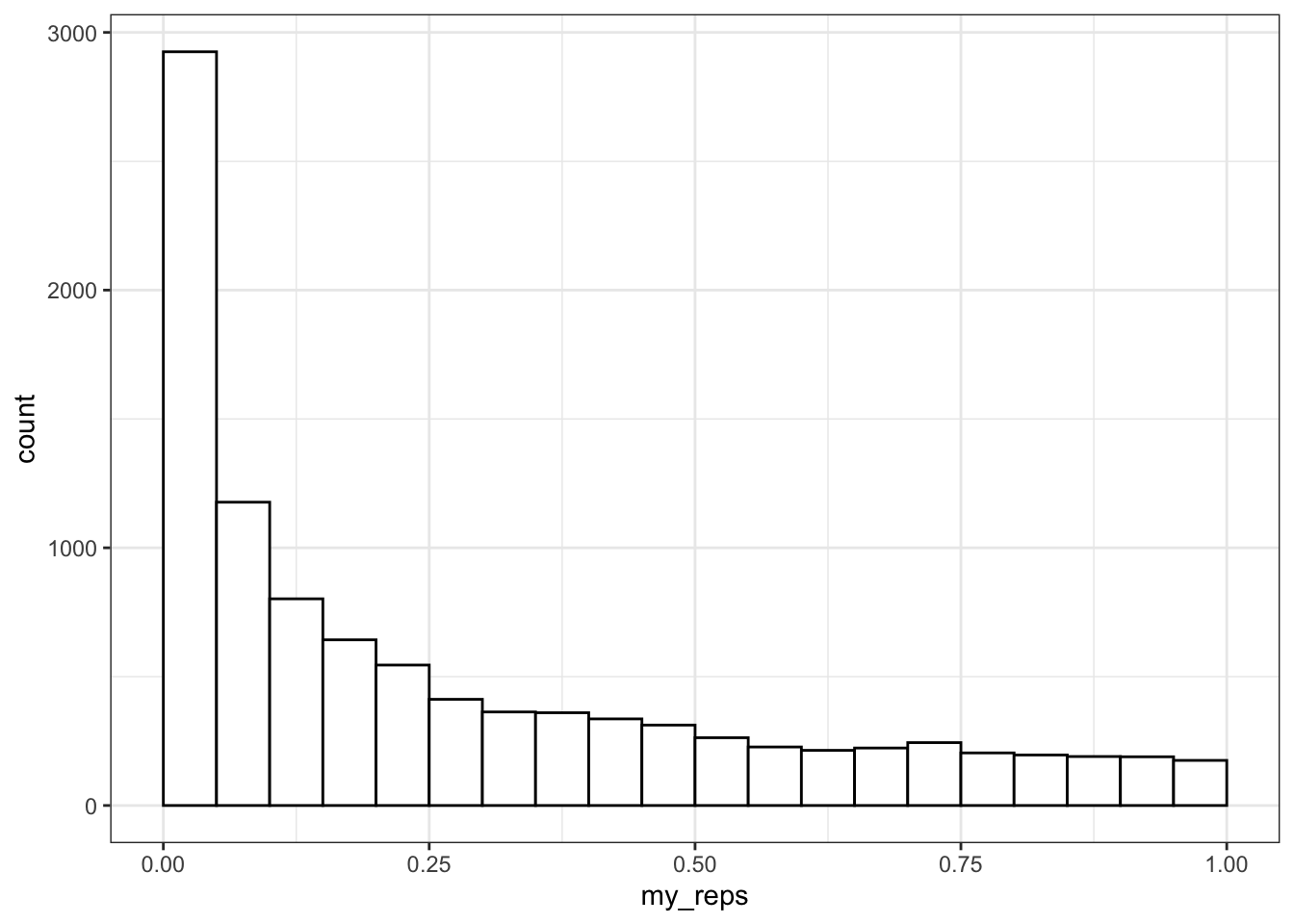

Now replicate the simulation 1000 times.

my_reps <- replicate(1e4, sim_t_ind(100, 0.7, 1, 0.5, 1))

alpha <- 0.05

power <- mean(my_reps < alpha)

power## [1] 0.2925Run the code above several times. How much does the power value fluctuate? How many replications do you need to run to get a reliable estimate of power?

Compare your power estimate from simluation to a power calculation using power.t.test(). Here, delta is the difference between m1 and m2 above.

##

## Two-sample t test power calculation

##

## n = 100

## delta = 0.2

## sd = 1

## sig.level = 0.05

## power = 0.2902664

## alternative = two.sided

##

## NOTE: n is number in *each* groupYou can plot the distribution of p-values.

ggplot() +

geom_histogram(

aes(my_reps),

binwidth = 0.05,

boundary = 0,

fill = "white",

color = "black"

)

What do you think the distribution of p-values is when there is no effect (i.e., the means are identical)? Check this yourself.

Make sure the boundary argument is set to 0 for p-value histograms. See what happens with a null effect if boundary is not set.

8.7.3 Correlation

You can test if continuous variables are related to each other using the cor() function. Let’s use rnorm_multi() to make a quick table of correlated values.

## [1] -0.4960331

Set n to a large number like 1e6 so that the correlations are less affected by chance. Change the value of the mean for a, x, or y. Does it change the correlation between x and y? What happens when you increase or decrease the sd? Can you work out any rules here?

cor() defaults to Pearson’s correlations. Set the method argument to use Kendall or Spearman correlations.

## [1] -0.47249928.7.3.1 Sampling function

Create a function that creates two variables with n observations and r correlation. Use the function cor.test() to give you p-values for the correlation.

sim_cor_test <- function(n = 100, r = 0) {

dat <- rnorm_multi(

n = n,

vars = 2,

r = r,

varnames = c("x", "y")

)

ctest <- cor.test(dat$x, dat$y)

ctest$p.value

}Once you’ve created your function, test it a few times, changing the values.

## [1] 0.0013548368.7.3.2 Calculate power

Now replicate the simulation 1000 times.

## [1] 0.965Compare to the value calcuated by the pwr package.

##

## approximate correlation power calculation (arctangh transformation)

##

## n = 50

## r = 0.5

## sig.level = 0.05

## power = 0.9669813

## alternative = two.sided8.8 Example

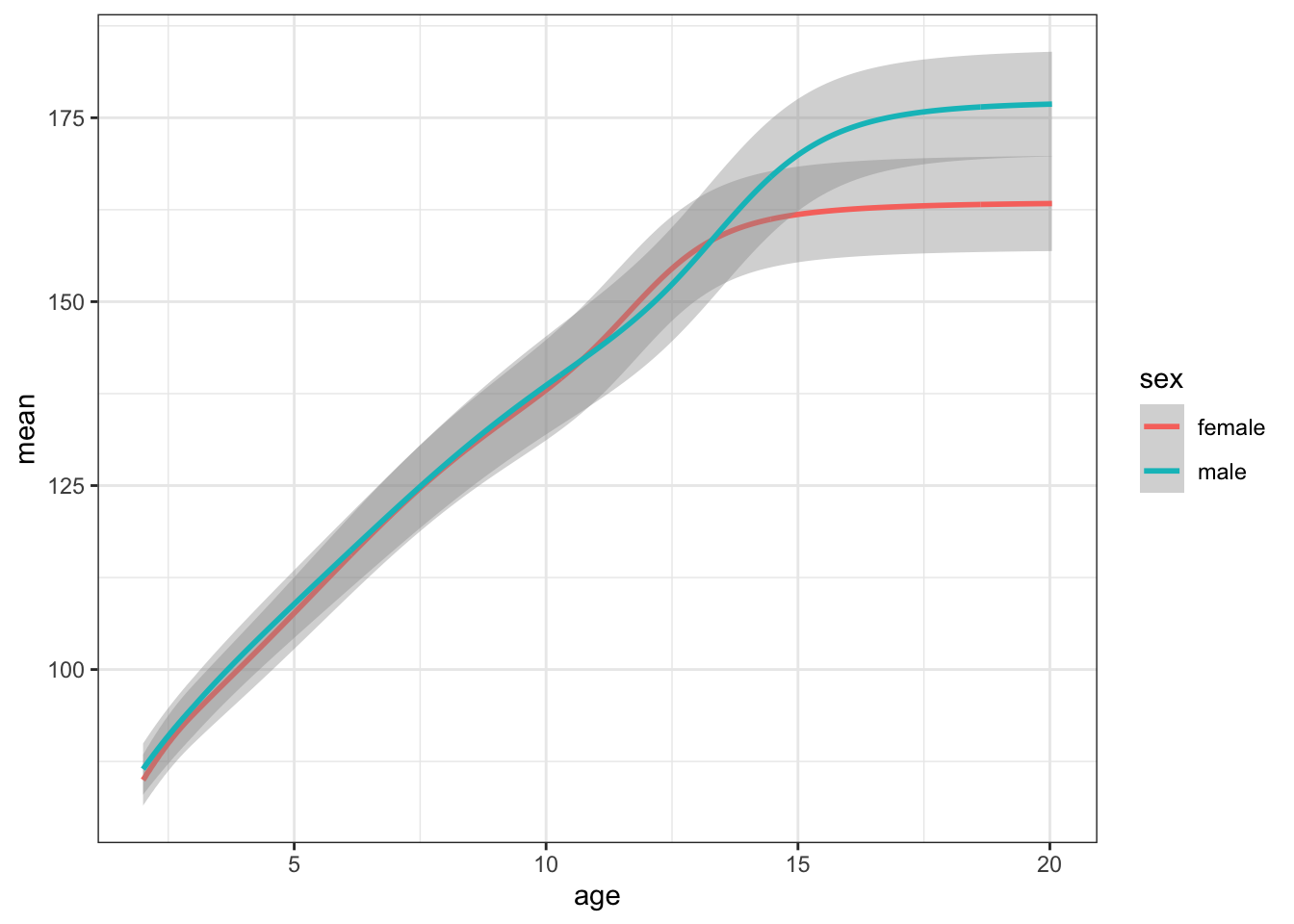

This example uses the Growth Chart Data Tables from the US CDC. The data consist of height in centimeters for the z-scores of –2, -1.5, -1, -0.5, 0, 0.5, 1, 1.5, and 2 by sex (1=male; 2=female) and half-month of age (from 24.0 to 240.5 months).

8.8.1 Load & wrangle

We have to do a little data wrangling first. Have a look at the data after you import it and relabel Sex to male and female instead of 1 and 2. Also convert Agemos (age in months) to years. Relabel the column 0 as mean and calculate a new column named sd as the difference between columns 1 and 0.

##

## ── Column specification ────────────────────────────────────────────────────────

## cols(

## Sex = col_character(),

## Agemos = col_character(),

## `-2` = col_double(),

## `-1.5` = col_double(),

## `-1` = col_double(),

## `-0.5` = col_double(),

## `0` = col_double(),

## `0.5` = col_double(),

## `1` = col_double(),

## `1.5` = col_double(),

## `2` = col_double()

## )8.8.2 Plot

Plot your new data frame to see how mean height changes with age for boys and girls.

ggplot(height_age, aes(age, mean, color = sex)) +

geom_smooth(aes(ymin = mean - sd,

ymax = mean + sd),

stat="identity")

8.8.3 Simulate a population

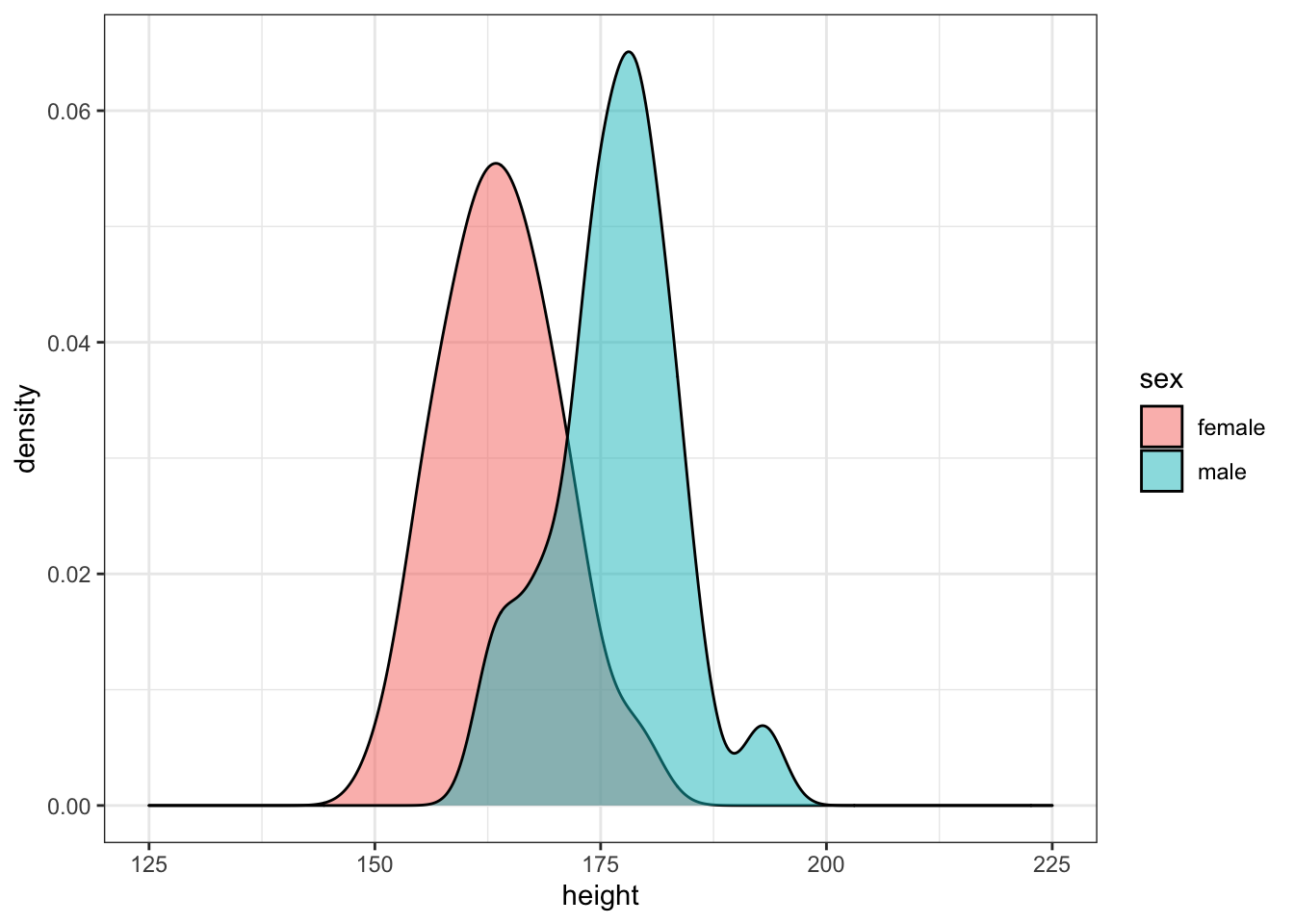

Simulate 50 random male heights and 50 random female heights for 20-year-olds using the rnorm() function and the means and SDs from the height_age table. Plot the data.

age_filter <- 20

m <- filter(height_age, age == age_filter, sex == "male")

f <- filter(height_age, age == age_filter, sex == "female")

sim_height <- tibble(

male = rnorm(50, m$mean, m$sd),

female = rnorm(50, f$mean, f$sd)

) %>%

gather("sex", "height", male:female)

ggplot(sim_height) +

geom_density(aes(height, fill = sex), alpha = 0.5) +

xlim(125, 225)

Run the simulation above several times, noting how the density plot changes. Try changing the age you’re simulating.

8.8.4 Analyse simulated data

Use the sim_t_ind(n, m1, sd1, m2, sd2) function we created above to generate one simulation with a sample size of 50 in each group using the means and SDs of male and female 14-year-olds.

age_filter <- 14

m <- filter(height_age, age == age_filter, sex == "male")

f <- filter(height_age, age == age_filter, sex == "female")

sim_t_ind(50, m$mean, m$sd, f$mean, f$sd)## [1] 0.00020269268.8.5 Replicate simulation

Now replicate this 1e4 times using the replicate() function. This function will save the returned p-values in a list (my_reps). We can then check what proportion of those p-values are less than our alpha value. This is the power of our test.

my_reps <- replicate(1e4, sim_t_ind(50, m$mean, m$sd, f$mean, f$sd))

alpha <- 0.05

power <- mean(my_reps < alpha)

power## [1] 0.64318.8.6 One-tailed prediction

This design has about 65% power to detect the sex difference in height (with a 2-tailed test). Modify the sim_t_ind function for a 1-tailed prediction.

You could just set alternative equal to “greater” in the function, but it might be better to add the alt argument to your function (giving it the same default value as t.test) and change the value of alternative in the function to alt.

sim_t_ind <- function(n, m1, sd1, m2, sd2, alt = "two.sided") {

v1 <- rnorm(n, m1, sd1)

v2 <- rnorm(n, m2, sd2)

t_ind <- t.test(v1, v2, paired = FALSE, alternative = alt)

return(t_ind$p.value)

}

alpha <- 0.05

my_reps <- replicate(1e4, sim_t_ind(50, m$mean, m$sd, f$mean, f$sd, "greater"))

mean(my_reps < alpha)## [1] 0.75548.8.7 Range of sample sizes

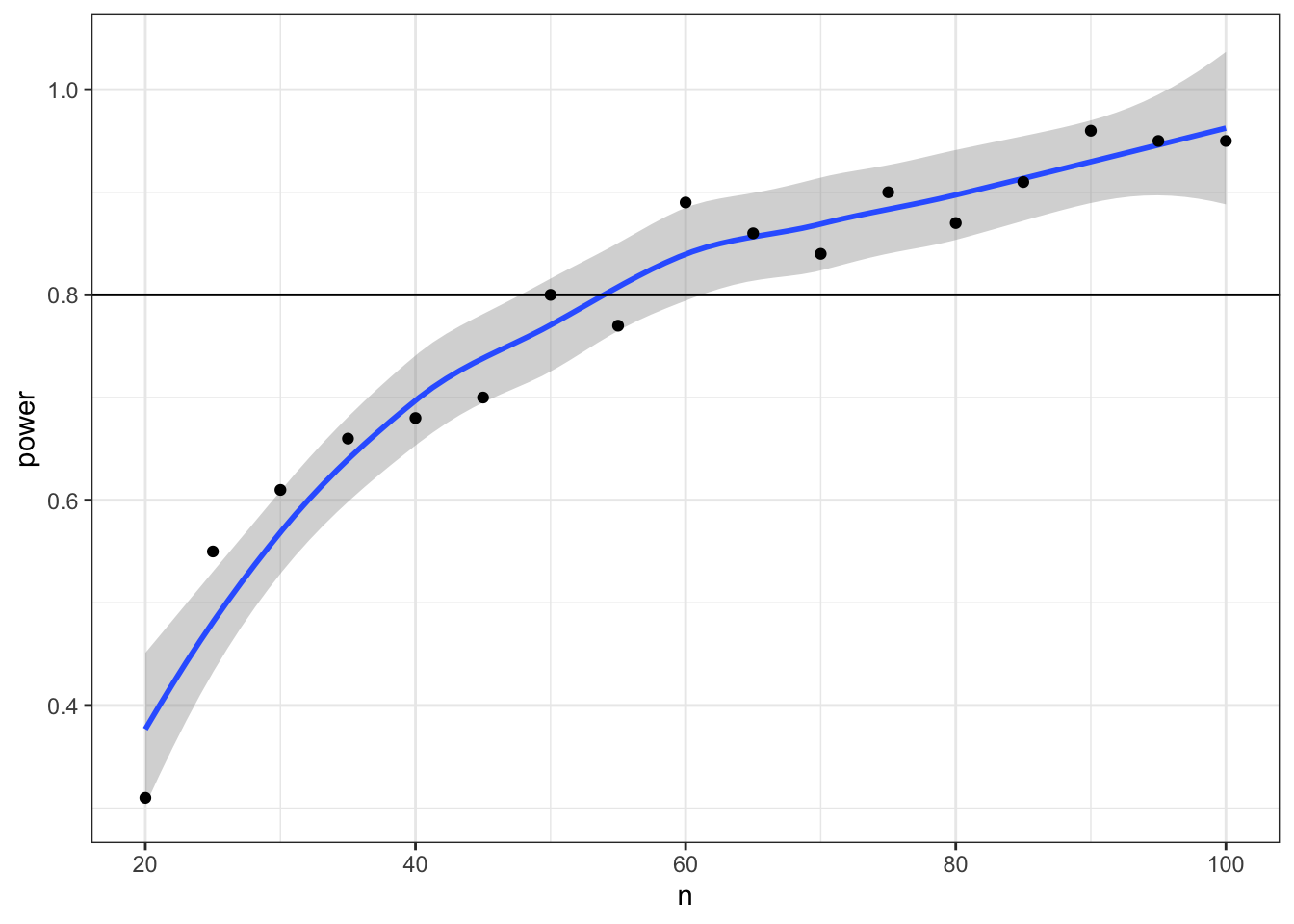

What if we want to find out what sample size will give us 80% power? We can try trial and error. We know the number should be slightly larger than 50. But you can search more systematically by repeating your power calculation for a range of sample sizes.

This might seem like overkill for a t-test, where you can easily look up sample size calculators online, but it is a valuable skill to learn for when your analyses become more complicated.

Start with a relatively low number of replications and/or more spread-out samples to estimate where you should be looking more specifically. Then you can repeat with a narrower/denser range of sample sizes and more iterations.

# make another custom function to return power

pwr_func <- function(n, reps = 100, alpha = 0.05) {

ps <- replicate(reps, sim_t_ind(n, m$mean, m$sd, f$mean, f$sd, "greater"))

mean(ps < alpha)

}

# make a table of the n values you want to check

power_table <- tibble(

n = seq(20, 100, by = 5)

) %>%

# run the power function for each n

mutate(power = map_dbl(n, pwr_func))

# plot the results

ggplot(power_table, aes(n, power)) +

geom_smooth() +

geom_point() +

geom_hline(yintercept = 0.8)## `geom_smooth()` using method = 'loess' and formula 'y ~ x'

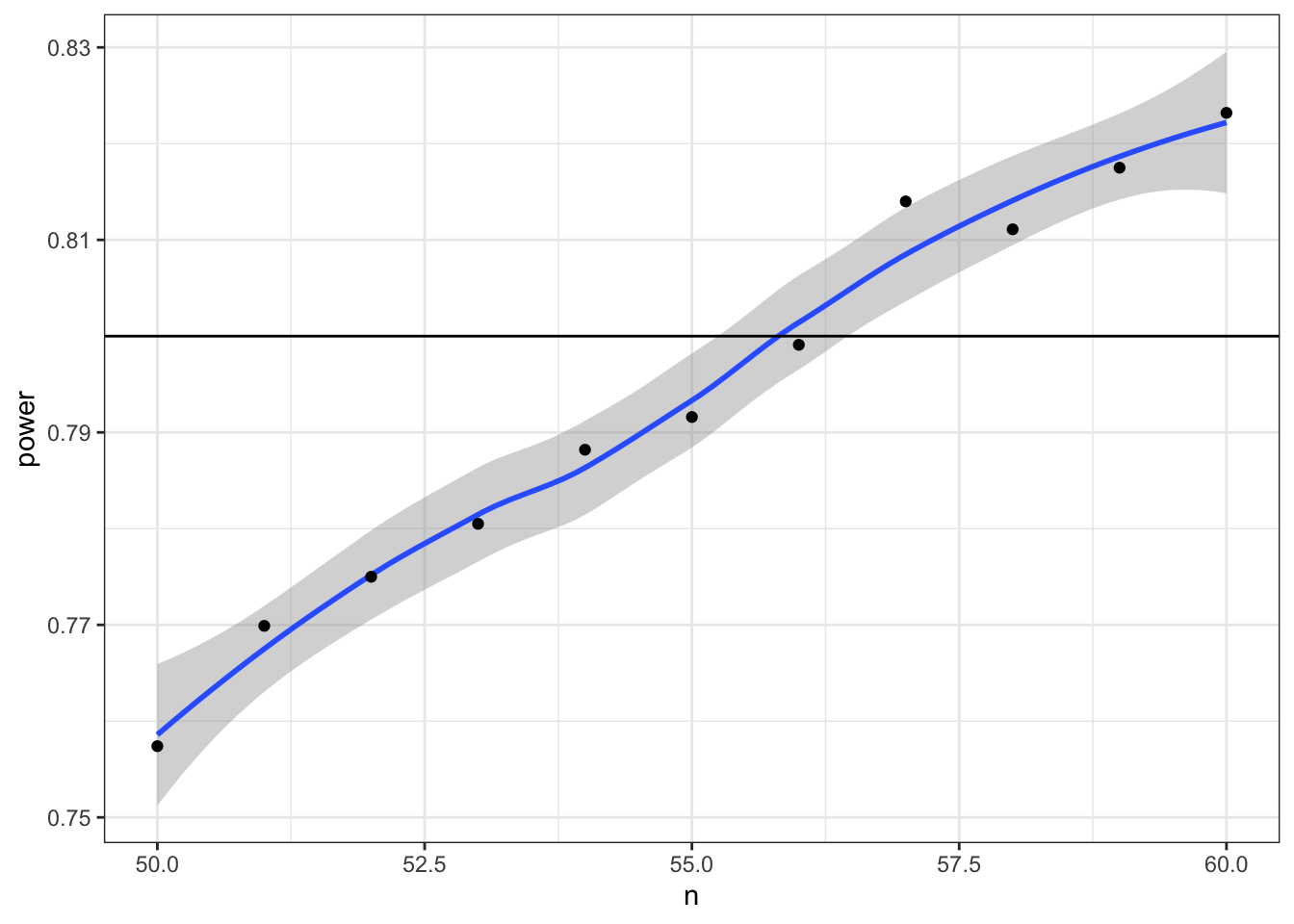

Now we can narrow down our search to values around 55 (plus or minus 5) and increase the number of replications from 1e3 to 1e4.

power_table <- tibble(

n = seq(50, 60)

) %>%

mutate(power = map_dbl(n, pwr_func, reps = 1e4))

ggplot(power_table, aes(n, power)) +

geom_smooth() +

geom_point() +

geom_hline(yintercept = 0.8)## `geom_smooth()` using method = 'loess' and formula 'y ~ x'

8.9 Glossary

| term | definition |

|---|---|

| alpha | The cutoff value for making a decision to reject the null hypothesis |

| beta | The false negative rate we accept for a statistical test. |

| binomial distribution | The distribution of data where each observation can have one of two outcomes, like success/failure, yes/no or head/tails. |

| bivariate normal | Two normally distributed vectors that have a specified correlation with each other. |

| confidence interval | A type of interval estimate used to summarise a given statistic or measurement where a proportion of intervals calculated from the sample(s) will contain the true value of the statistic. |

| correlation | The relationship two vectors have to each other. |

| covariance matrix | Parameters showing how a set of vectors vary and are correlated. |

| discrete | Data that can only take certain values, such as integers. |

| effect size | The difference between the effect in your data and the null effect (usually a chance value) |

| effect | Some measure of your data, such as the mean value, or the number of standard deviations the mean differs from a chance value. |

| false negative | When a test concludes there is no effect when there really is an effect |

| false positive | When a test concludes there is an effect when there really is no effect |

| function | A named section of code that can be reused. |

| nhst | Null Hypothesis Signficance Testing |

| normal distribution | A symmetric distribution of data where values near the centre are most probable. |

| null effect | An outcome that does not show an otherwise expected effect. |

| p value | The probability of seeing an effect at least as extreme as what you have, if the real effect was the value you are testing against (e.g., a null effect) |

| parameter | A value that describes a distribution, such as the mean or SD |

| poisson distribution | A distribution that models independent events happening over a unit of time |

| power | The probability of rejecting the null hypothesis when it is false. |

| probability | A number between 0 and 1 where 0 indicates impossibility of the event and 1 indicates certainty |

| sesoi | Smallest Effect Size of Interest: the smallest effect that is theoretically or practically meaningful |

| significant | The conclusion when the p-value is less than the critical alpha. |

| simulation | Generating data from summary parameters |

| true positive | When a test concludes there is an effect when there is really is an effect |

| type i error | A false positive; When a test concludes there is an effect when there is really is no effect |

| type ii error | A false negative; When a test concludes there is no effect when there is really is an effect |

| uniform distribution | All numbers in the range have an equal probability of being sampled |

| univariate | Relating to a single variable. |

E References

DeBruine, Lisa M, and Dale J Barr. 2019. “Understanding Mixed Effects Models Through Data Simulation,” June. https://doi.org/10.31234/osf.io/xp5cy.

Lakens, Daniel, and Aaron R Caldwell. 2019. “Simulation-Based Power-Analysis for Factorial Anova Designs,” May. https://doi.org/10.31234/osf.io/baxsf.

Lakens, Daniël, Anne M. Scheel, and Peder M. Isager. 2018. “Equivalence Testing for Psychological Research: A Tutorial.” Advances in Methods and Practices in Psychological Science 1 (2): 259–69. https://doi.org/10.1177/2515245918770963.

Morey, Richard D., Rink Hoekstra, Jeffrey N. Rouder, Michael D. Lee, and Eric-Jan Wagenmakers. 2016. “The Fallacy of Placing Confidence in Confidence Intervals.” Psychonomic Bulletin & Review 23 (1): 103–23. https://doi.org/10.3758/s13423-015-0947-8.