Appendix 1b: Example Code (no tidyverse)

Understanding mixed effects models through data simulation

Lisa M. DeBruine & Dale J. Barr

Source:vignettes/appendix1b_example_code.Rmd

appendix1b_example_code.RmdDownload the .Rmd for this example

This script provides equivalent code to the version using pipes and functions from the tidyverse. While this code may be of interest to people who want to learn more about the computations that generate correlated data, it will be more difficult to extend to more complicated designs.

Simulate data with crossed random factors

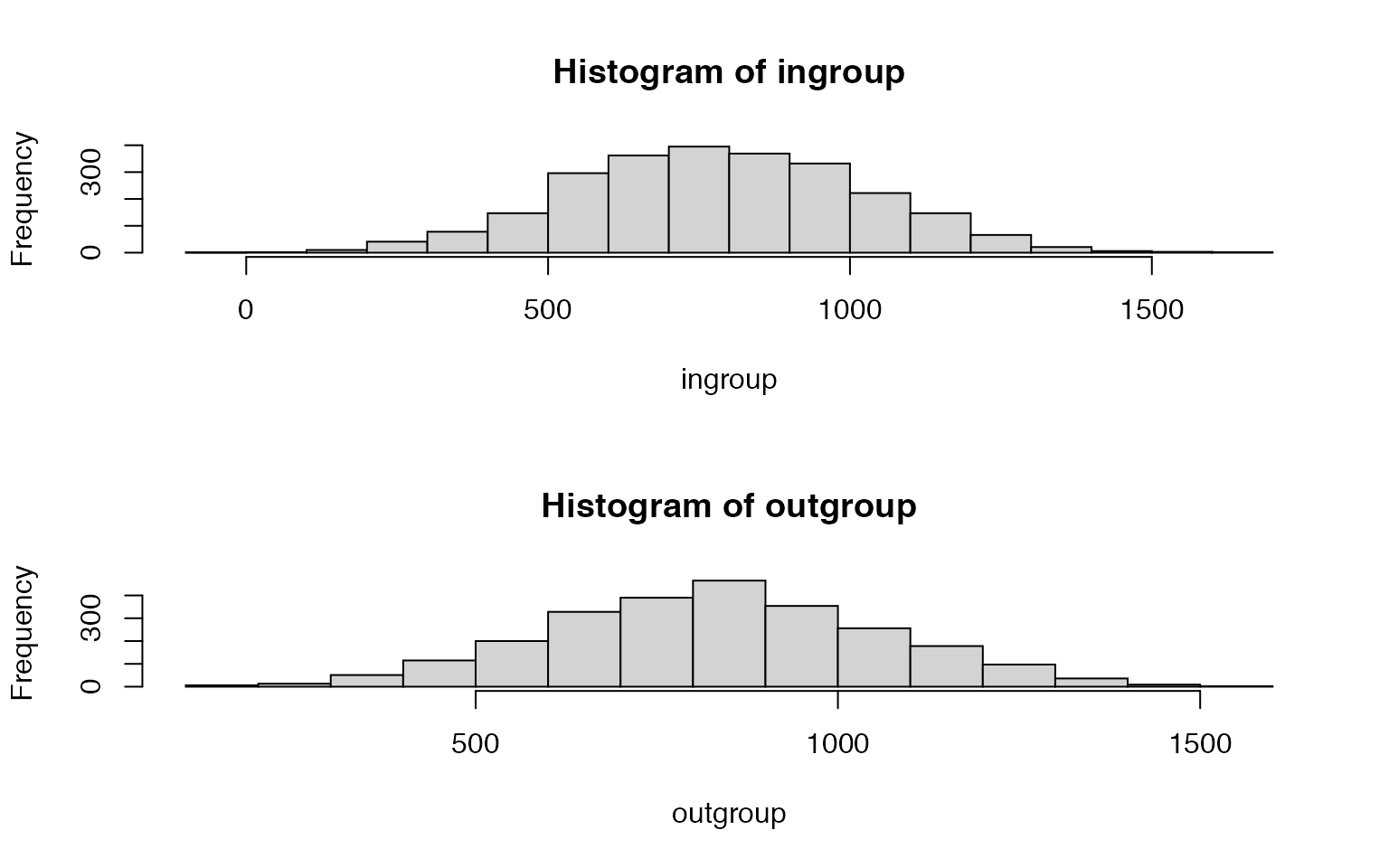

In this hypothetical study, subjects classify the emotional expressions of faces as quickly as possible, and we use their response time as the primary dependent variable. Let’s imagine that the faces are of two types: either from the subject’s ingroup or from an outgroup. For simplicity, we further assume that each face appears only once in the stimulus set. The key question is whether there is any difference in classification speed across the type of face.

The important parts of the design are:

- Random factor 1: subjects (associated variables will be prefixed by

Tortau) - Random factor 2: faces (associated variables will be prefixed by

Ooromega) - Fixed factor 1: category (level = ingroup, outgroup)

- within subject: subjects see both ingroup and outgroup faces

- between face: each face is either ingroup or outgroup

Establish the data-generating parameters

# set all data-generating parameters

beta_0 <- 800 # intercept; i.e., the grand mean

beta_1 <- 50 # slope; i.e, effect of category

omega_0 <- 80 # by-item random intercept sd

tau_0 <- 100 # by-subject random intercept sd

tau_1 <- 40 # by-subject random slope sd

rho <- .2 # correlation between intercept and slope

sigma <- 200 # residual (error) sdSimulate the sampling process

# set number of subjects and items

n_subj <- 100 # number of subjects

n_ingroup <- 25 # number of items in ingroup

n_outgroup <- 25 # number of items in outgroupSimulate the sampling of subjects

# simulate a sample of subjects

# calculate random intercept / random slope covariance

covar <- rho * tau_0 * tau_1

# put values into variance-covariance matrix

cov_mx <- matrix(

c(tau_0^2, covar,

covar, tau_1^2),

nrow = 2, byrow = TRUE)

# generate the by-subject random effects

subject_rfx <- MASS::mvrnorm(n = n_subj,

mu = c(T_0s = 0, T_1s = 0),

Sigma = cov_mx)

# combine with subject IDs

subjects <- data.frame(subj_id = seq_len(n_subj),

subject_rfx)Check your values

data.frame(

parameter = c("omega_0", "tau_0", "tau_1", "rho"),

value = c(omega_0, tau_0, tau_1, rho),

simulated = c(

sd(items$O_0i),

sd(subjects$T_0s),

sd(subjects$T_1s),

cor(subjects$T_0s, subjects$T_1s)

)

)## parameter value simulated

## 1 omega_0 80.0 68.7759151

## 2 tau_0 100.0 91.6318426

## 3 tau_1 40.0 44.5699772

## 4 rho 0.2 0.1916702Simulate trials (encounters)

# cross subject and item IDs; add an error term

trials <- expand.grid(subj_id = subjects$subj_id,

item_id = items$item_id)

trials$sigma = rnorm(nrow(trials), mean = 0, sd = sigma)

# join subject and item tables

joined <- merge(trials, subjects, by = "subj_id")

dat_sim <- merge(joined, items, by = "item_id")Data simulation function

Once you’ve tested your data generating code above, put it into a function so you can run it repeatedly. We combined a few steps in calculating Sigma for the correlated values.

# set up the custom data simulation function

my_sim_data <- function(

n_subj = 100, # number of subjects

n_ingroup = 25, # number of items in ingroup

n_outgroup = 25, # number of items in outgroup

beta_0 = 800, # grand mean

beta_1 = 50, # effect of category

omega_0 = 80, # by-item random intercept sd

tau_0 = 100, # by-subject random intercept sd

tau_1 = 40, # by-subject random slope sd

rho = 0.2, # correlation between intercept and slope

sigma = 200 # residual (standard deviation)

) {

# simulate a sample of items

items <- data.frame(

item_id = seq_len(n_ingroup + n_outgroup),

category = rep(c("ingroup", "outgroup"), c(n_ingroup, n_outgroup)),

X_i = rep(c(-0.5, 0.5), c(n_ingroup, n_outgroup)),

O_0i = rnorm(n = n_ingroup + n_outgroup, mean = 0, sd = omega_0)

)

# simulate subjects

Sigma <- matrix(c(tau_0^2, tau_0 * tau_1 * rho,

tau_0 * tau_1 * rho, tau_1^2),

nrow = 2, byrow = TRUE)

S <- MASS::mvrnorm(n_subj, c(T_0s = 0, T_1s = 0), Sigma)

subjects <- data.frame(subj_id = 1:n_subj, S)

# cross subject and item IDs; add an error term

trials <- expand.grid(subj_id = subjects$subj_id,

item_id = items$item_id)

trials$sigma <- rnorm(nrow(trials), mean = 0, sd = sigma)

# join subject and item tables

joined <- merge(trials, subjects, by = "subj_id")

dat_sim <- merge(joined, items, by = "item_id")

# calculate the response variable

dat_sim$RT <- beta_0 + dat_sim$O_0i + dat_sim$T_0s +

(beta_1 + dat_sim$T_1s) * dat_sim$X_i + dat_sim$sigma

dat_sim

}Analyze the simulated data

# fit a linear mixed-effects model to data

mod_sim <- lmer(RT ~ 1 + X_i + (1 | item_id) + (1 + X_i | subj_id),

data = dat_sim)

summary(mod_sim, corr = FALSE)## Linear mixed model fit by REML. t-tests use Satterthwaite's method [

## lmerModLmerTest]

## Formula: RT ~ 1 + X_i + (1 | item_id) + (1 + X_i | subj_id)

## Data: dat_sim

##

## REML criterion at convergence: 67746.6

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -3.6906 -0.6743 -0.0029 0.6791 3.5866

##

## Random effects:

## Groups Name Variance Std.Dev. Corr

## subj_id (Intercept) 8219 90.66

## X_i 2683 51.80 0.10

## item_id (Intercept) 4467 66.83

## Residual 41399 203.47

## Number of obs: 5000, groups: subj_id, 100; item_id, 50

##

## Fixed effects:

## Estimate Std. Error df t value Pr(>|t|)

## (Intercept) 807.72 13.41 114.96 60.238 <2e-16 ***

## X_i 47.91 20.43 54.20 2.346 0.0227 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

# get a table of results

sumry <- summary(mod_sim, corr = FALSE)

ffx <- fixef(mod_sim) # fixed effects

irfx <- attr(sumry$varcor$item_id, "stddev") # random effects for items

srfx <- attr(sumry$varcor$subj_id, "stddev") # random effects for subjects

rc <- attr(sumry$varcor$subj_id, "correlation")[1, 2] # rho estimate

res <- sigma(mod_sim) # residual error

data.frame(

parameter = c("beta_0", "beta_1", "omega_0", "tau_0", "tau_1", "rho", "sigma"),

value = c(beta_0, beta_1, omega_0, tau_0, tau_1, rho, sigma),

estimate = c(ffx[["(Intercept)"]],

ffx[["X_i"]],

irfx[["(Intercept)"]],

srfx[["(Intercept)"]],

srfx[["X_i"]],

rc,

res),

term = c(rownames(sumry$coefficients), rep(NA, 5)),

std.error = c(sumry$coefficients[, 2], rep(NA, 5)),

statistic = c(sumry$coefficients[, 4], rep(NA, 5)),

df = c(sumry$coefficients[, 3], rep(NA, 5)),

p.value = c(sumry$coefficients[, 5], rep(NA, 5))

)## parameter value estimate term std.error statistic df

## 1 beta_0 800.0 807.72240229 (Intercept) 13.40893 60.237635 114.96386

## 2 beta_1 50.0 47.91285362 X_i 20.42749 2.345508 54.19737

## 3 omega_0 80.0 66.83329418 <NA> NA NA NA

## 4 tau_0 100.0 90.65646942 <NA> NA NA NA

## 5 tau_1 40.0 51.79613859 <NA> NA NA NA

## 6 rho 0.2 0.09632442 <NA> NA NA NA

## 7 sigma 200.0 203.46675530 <NA> NA NA NA

## p.value

## 1 8.380327e-89

## 2 2.269067e-02

## 3 NA

## 4 NA

## 5 NA

## 6 NA

## 7 NACalculate Power

You can wrap up the data generating function and the analysis code in a new function (single_run) that returns a table of the analysis results, and optionally saves this info to a file if you set a filename.

# set up the power function

single_run <- function(filename = NULL, ...) {

# ... is a shortcut that forwards any arguments to my_sim_data()

dat_sim <- my_sim_data(...)

mod_sim <- lmer(RT ~ X_i + (1 | item_id) + (1 + X_i | subj_id),

dat_sim)

# get a table of results

sumry <- summary(mod_sim, corr = FALSE)

ffx <- fixef(mod_sim)

irfx <- attr(sumry$varcor$item_id, "stddev")

srfx <- attr(sumry$varcor$subj_id, "stddev")

sim_results <- data.frame(

parameter = c("beta_0", "beta_1", "omega_0", "tau_0", "tau_1", "rho", "sigma"),

value = c(beta_0, beta_1, omega_0, tau_0, tau_1, rho, sigma),

estimate = c(ffx[["(Intercept)"]],

ffx[["X_i"]],

irfx[["(Intercept)"]],

srfx[["(Intercept)"]],

srfx[["X_i"]],

attr(sumry$varcor$subj_id, "correlation")[1, 2],

sigma(mod_sim)),

term = c(rownames(sumry$coefficients), rep(NA, 5)),

std.error = c(sumry$coefficients[, 2], rep(NA, 5)),

statistic = c(sumry$coefficients[, 4], rep(NA, 5)),

df = c(sumry$coefficients[, 3], rep(NA, 5)),

p.value = c(sumry$coefficients[, 5], rep(NA, 5))

)

# append the results to a file if filename is set

if (!is.null(filename)) {

append <- file.exists(filename) # append if the file exists

write.table(sim_results, filename, sep = ",",

row.names = FALSE,

append = append,

col.names = !append)

}

# return the tidy table

sim_results

}

# run one model with default parameters

single_run()## parameter value estimate term std.error statistic df

## 1 beta_0 800.0 812.9633813 (Intercept) 14.30341 56.837023 116.28041

## 2 beta_1 50.0 72.8248327 X_i 21.40344 3.402483 51.13854

## 3 omega_0 80.0 71.6541083 <NA> NA NA NA

## 4 tau_0 100.0 96.9226766 <NA> NA NA NA

## 5 tau_1 40.0 39.3914381 <NA> NA NA NA

## 6 rho 0.2 0.1736067 <NA> NA NA NA

## 7 sigma 200.0 199.5160212 <NA> NA NA NA

## p.value

## 1 1.103124e-86

## 2 1.304604e-03

## 3 NA

## 4 NA

## 5 NA

## 6 NA

## 7 NA

# run one model with new parameters

single_run(n_ingroup = 50, n_outgroup = 45, beta_1 = 20)## parameter value estimate term std.error statistic df

## 1 beta_0 800.0 797.66534765 (Intercept) 13.70658 58.195791 164.9406

## 2 beta_1 50.0 19.53176608 X_i 16.08609 1.214202 103.9661

## 3 omega_0 80.0 73.13826915 <NA> NA NA NA

## 4 tau_0 100.0 112.78709472 <NA> NA NA NA

## 5 tau_1 40.0 40.14726094 <NA> NA NA NA

## 6 rho 0.2 -0.03579995 <NA> NA NA NA

## 7 sigma 200.0 199.40746820 <NA> NA NA NA

## p.value

## 1 7.262043e-112

## 2 2.274214e-01

## 3 NA

## 4 NA

## 5 NA

## 6 NA

## 7 NATo get an accurate estimation of power, you need to run the simulation many times. We use 100 here as an example, but your results are more accurate the more replications you run. This will depend on the specifics of your analysis, but we recommend at least 1000 replications.

# run simulations

reps <- 100

sims <- replicate(reps, single_run(), simplify = FALSE)

sims <- lapply(sims, as.data.frame)

sims <- do.call("rbind", sims)Alternatively, you can save the simulations to a file and read from that file in subsequent runs.

filename <- "sims/sims_base.csv" # change for new analyses

if (!file.exists(filename)) {

reps <- 100

trash <- replicate(reps, single_run(filename))

}

# read saved simulation data

sims <- read.csv(filename)

# calculate mean estimates and power for specified alpha

alpha <- 0.05

fcat <- sims[sims$term == "X_i", ]

power <- mean(fcat$p.value < alpha)

mean_estimate <- mean(fcat$estimate)

mean_se <- mean(fcat$std.error)The power is NA with a mean estimate for the effect of category of NA and a mean standard error of NA.

Compare to ANOVA

One way many researchers would normally analyse data like this is by averaging each subject’s reaction times across the ingroup and outgroup stimuli and compare them using a paired-samples t-test or ANOVA (which is formally equivalent). Here, we use afex::aov_ez to analyse a version of our dataset that is aggregated by subject.

# aggregate by subject and analyze with ANOVA

dat_subj <- with(dat_sim, aggregate(RT, list(subj_id, category, X_i), mean))

names(dat_subj) <- c("subj_id", "category", "X_i", "RT")

afex::aov_ez(

id = "subj_id",

dv = "RT",

within = "category",

data = dat_subj

)## Anova Table (Type 3 tests)

##

## Response: RT

## Effect df MSE F ges p.value

## 1 category 1, 99 2997.37 38.29 *** .052 <.001

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '+' 0.1 ' ' 1Alternatively, you could aggregate by item, averaging all subjects’ scores for each item.

# aggregate by item and analyze with ANOVA

dat_item <- with(dat_sim, aggregate(RT, list(item_id, category, X_i), mean))

names(dat_item) <- c("item_id", "category", "X_i", "RT")

afex::aov_ez(

id = "item_id",

dv = "RT",

between = "category",

data = dat_item

)## Anova Table (Type 3 tests)

##

## Response: RT

## Effect df MSE F ges p.value

## 1 category 1, 48 4880.55 5.88 * .109 .019

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '+' 0.1 ' ' 1We can create a power analysis function that simulates data using our data-generating process from my_sim_data(), creates these two aggregated datasets, and analyses them with ANOVA. We’ll just return the p-values for the effect of category as we can calculate power as the percentage of these simulations that reject the null hypothesis.

# power function for ANOVA

my_anova_power <- function(...) {

dat_sim <- my_sim_data(...)

dat_subj <- with(dat_sim, aggregate(RT, list(subj_id, category, X_i), mean))

names(dat_subj) <- c("subj_id", "category", "X_i", "RT")

dat_item <- with(dat_sim, aggregate(RT, list(item_id, category, X_i), mean))

names(dat_item) <- c("item_id", "category", "X_i", "RT")

a_subj <- afex::aov_ez(id = "subj_id",

dv = "RT",

within = "category",

data = dat_subj)

suppressMessages(

# check contrasts message is annoying

a_item <- afex::aov_ez(

id = "item_id",

dv = "RT",

between = "category",

data = dat_item

)

)

list(

"subj" = a_subj$anova_table$`Pr(>F)`,

"item" = a_item$anova_table$`Pr(>F)`

)

}Run this function with the default parameters to determine the power each analysis has to detect an effect of category of 50 ms.

# run simulations and calculate power

reps <- 100

anova_sims <- replicate(reps, my_anova_power(), simplify = FALSE)

anova_sims <- lapply(anova_sims, as.data.frame)

anova_sims <- do.call("rbind", anova_sims)

alpha <- 0.05

power_subj <- mean(anova_sims$subj < alpha)

power_item <- mean(anova_sims$item < alpha)The by-subjects ANOVA has power of 0.93, while the by-items ANOVA has power of 0.52. This isn’t simply a consequence of within versus between design or the number of subjects versus items, but rather a consequence of the inflated false positive rate of some aggregated analyses.

Set the effect of category to 0 to calculate the false positive rate. This is the probability of concluding there is an effect when there is no actual effect in your population.

# run simulations and calculate the false positive rate

reps <- 100

anova_fp <- replicate(reps, my_anova_power(beta_1 = 0), simplify = FALSE)

anova_fp <- lapply(anova_fp, as.data.frame)

anova_fp <- do.call("rbind", anova_fp)

false_pos_subj <- mean(anova_fp$subj < alpha)

false_pos_item <- mean(anova_fp$item < alpha)

false_pos_subj## [1] 0.57

false_pos_item## [1] 0.05Ideally, your false positive rate will be equal to alpha, which we set here at 0.05. The by-subject aggregated analysis has a massively inflated false positive rate of 0.57, while the by-item aggregated analysis has a closer-to-nominal false positive rate of 0.05. This is not a mistake, but a consequence of averaging items and analysing a between-item factor. Indeed, this problem with false positives is one of the most compelling reasons to analyze cross-classified data using mixed effects models.